AI Reverse-Engineers Art: Deconstructing Van Gogh's Starry Night Over the Rhône, a Breakthrough from the University of Washington

AI has pushed the boundaries once again, this time by "deconstructing" a Van Gogh masterpiece!

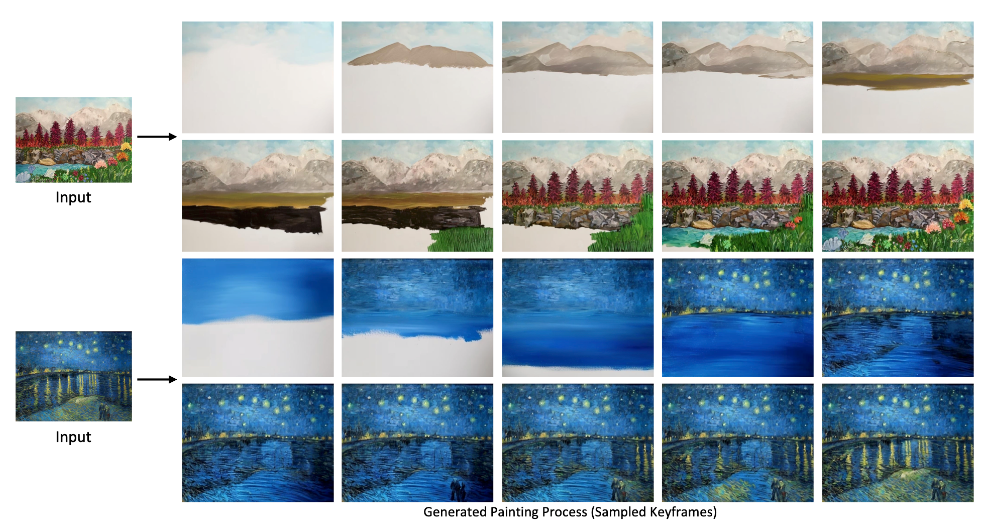

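Given nothing more than the finished painting, the AI imitates Van Gogh's brushwork and reconstructs, step by step, how the whole picture could have been created.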

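Compare the two side by side: the AI's final result is a nearly 1:1 reproduction of the original, and along the way it shows how the entire picture was built up.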

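This striking technique comes from a University of Washington lab and is called "Inverse Painting". The work has been accepted to SIGGRAPH Asia 2024, a top-tier venue, where it will be presented. Two Chinese researchers are on the team: Bowei Chen of Northeastern University and Yifan Wang, a graduate of ShanghaiTech University.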

A Closer Look at the Technology

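So how does it work? Behind it is a fairly involved system. It uses a diffusion-based inverse-painting method that takes a finished artwork as input and reconstructs a plausible sequence of steps that could have produced it. The process breaks down into several main stages: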

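1. Learning the painting process: The team collected 294 videos of acrylic paintings being made. After careful processing of these videos, the AI learns from them how real artists work.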

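2. Generating painting instructions: The AI analyzes the different elements of the picture (such as sky, trees, and figures) and how they relate to one another. It then generates a series of instructions telling itself what to paint next.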

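3. Generating images with a diffusion model: Following these instructions, the AI adds detail step by step. This mimics how a human artist paints and builds up the complete work bit by bit.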

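4. Understanding text and regions: Alongside the text instructions, the AI also creates region masks. These keep each element of the painting in the right place, which improves the accuracy and quality of the result.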

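5. Step-by-step rendering with time control: Starting from a blank canvas, the AI generates the full painting sequence step by step. It also mimics the time intervals of real painting, so the process looks more natural and fluid.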
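The article does not describe how the 294 videos were processed. The sketch below is purely hypothetical and shows one simple way to expose both the painting order (step 1) and the time intervals (step 5) to a model. Each time-lapse video is sliced into examples of the form (target, current canvas, next canvas, elapsed time). `TrainingExample` and `make_examples` are illustrative names, not part of the project's code. Frames can be any image objects.

```python
from dataclasses import dataclass
from typing import Any, List, Sequence


@dataclass
class TrainingExample:
    """One hypothetical training example cut from a painting video."""
    target: Any        # last frame of the video, i.e. the finished painting
    current: Any       # canvas at time t
    next_canvas: Any   # canvas `stride` frames later
    dt: float          # elapsed time between the two canvases (same unit as the timestamps)


def make_examples(frames: Sequence[Any], timestamps: Sequence[float],
                  stride: int = 1) -> List[TrainingExample]:
    """Pair each frame with the frame `stride` steps later (illustrative only)."""
    if len(frames) != len(timestamps):
        raise ValueError("frames and timestamps must have the same length")
    if stride < 1:
        raise ValueError("stride must be a positive integer")
    if not frames:
        return []
    target = frames[-1]  # the finished painting serves as the conditioning target
    examples = []
    for i in range(0, len(frames) - stride, stride):
        j = i + stride
        examples.append(TrainingExample(target, frames[i], frames[j],
                                        timestamps[j] - timestamps[i]))
    return examples
```

Storing `dt` in every example is what would let a model condition on how much time passes between two canvas states.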

Training and Testing Pipeline

The process consists of two main training stages, followed by testing:

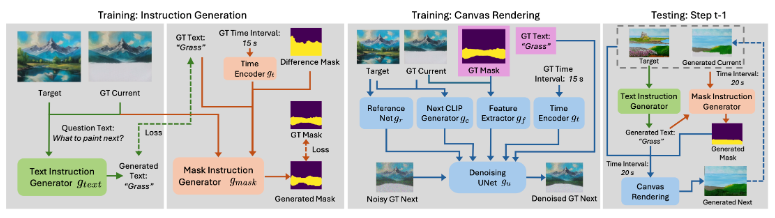

Stage 1: Instruction Generation

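In this stage the system uses two generators: one produces text instructions and the other selects regions. The text instruction generator compares the target artwork with the current canvas and proposes concrete steps such as "paint the sky" or "add flowers". Meanwhile, the region generator produces a binary mask that marks exactly which area should be changed. Together, the two instructions let the AI paint precisely in the right place.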
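Here is a minimal Python/NumPy sketch that makes the division of labor between the two generators concrete. It is a stand-in built on assumptions. In the real system both generators are learned models. Here, `propose_instruction` instead picks the region that differs most from the target, using a hypothetical segmentation map `labels` and a name table `names`. It turns that region's name into a text instruction and returns a binary mask of the region's unfinished pixels. The `threshold` value is arbitrary.

```python
import numpy as np


def propose_instruction(target, canvas, labels, names, threshold=0.1):
    """Heuristic stand-in for the text generator and the region (mask) generator.

    target, canvas: float arrays of shape (H, W) or (H, W, C)
    labels: int array of shape (H, W), a hypothetical segmentation of the target
    names: dict mapping label id -> region name, e.g. {0: "sky"}
    """
    diff = np.abs(target - canvas)
    if diff.ndim == 3:                     # colour: use the largest per-channel difference
        diff = diff.max(axis=-1)
    unfinished = diff > threshold          # pixels that still need paint
    if not unfinished.any():
        return None, np.zeros(labels.shape, dtype=bool)  # nothing left to paint
    region_ids = np.unique(labels)
    # Score each region by how much unfinished difference it still contains
    scores = [diff[unfinished & (labels == r)].sum() for r in region_ids]
    best = int(region_ids[int(np.argmax(scores))])
    text = f"paint the {names[best]}"      # text instruction
    mask = unfinished & (labels == best)   # binary region mask
    return text, mask
```

The text is derived from the same region the mask selects. This keeps the two instructions consistent, which is the property the article highlights.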

Stage 2: Canvas Rendering

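The canvas-update stage puts the instructions from the previous stage into practice. It uses a diffusion-based renderer, which gradually refines a clean image out of noise. When updating the canvas, the renderer takes several inputs into account: the text instruction, the region mask, the elapsed time, and a comparison of features from the target artwork and the current canvas. Conditioning on all of these lets the AI follow the process and style of human artists more closely.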
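A deterministic DDIM-style sampling loop illustrates the idea of refining an image out of noise. In this sketch the learned, conditioned denoiser is replaced by a hypothetical oracle. The oracle "predicts" the clean image as the target inside the mask and the unchanged canvas outside it. The example therefore shows only the mechanics of the denoising update, not how the real renderer uses the text, mask, time, and feature inputs. The linear noise schedule and the function names are assumptions.

```python
import numpy as np


def ddim_alpha_bars(num_steps: int) -> np.ndarray:
    """Cumulative products of (1 - beta) for an assumed linear beta schedule."""
    betas = np.linspace(1e-4, 0.02, num_steps)
    return np.cumprod(1.0 - betas)


def render_update(canvas, target, mask, num_steps=50, seed=0):
    """Deterministic DDIM sampling (eta = 0) with a hypothetical oracle denoiser."""
    rng = np.random.default_rng(seed)
    alpha_bar = ddim_alpha_bars(num_steps)
    x = rng.standard_normal(canvas.shape)            # start from pure Gaussian noise
    for t in range(num_steps - 1, -1, -1):
        # Oracle x0 prediction: target inside the mask, unchanged canvas outside.
        # A real renderer would predict this from text, mask, time and image features.
        x0_pred = np.where(mask, target, canvas)
        # Noise implied by the current sample and the predicted clean image
        eps_pred = (x - np.sqrt(alpha_bar[t]) * x0_pred) / np.sqrt(1.0 - alpha_bar[t])
        alpha_bar_prev = alpha_bar[t - 1] if t > 0 else 1.0
        # DDIM update: re-noise the clean prediction to the previous, less noisy level
        x = np.sqrt(alpha_bar_prev) * x0_pred + np.sqrt(1.0 - alpha_bar_prev) * eps_pred
    return x
```

The final step uses alpha_bar_prev = 1, so the sample lands exactly on the predicted clean image. With a trained network, that prediction, and therefore the updated canvas, would come from the model.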

Testing

At test time, the AI system demonstrates that it can create a complete painting from scratch. Two key features of this process stand out:

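The first is coherence. The AI uses a self-referential (autoregressive) approach: each new step builds on the canvas produced by the previous one. This keeps the whole creation process coherent and logical, much like a human artist deliberately planning each stroke.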

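The second is time simulation. The system places a fixed time interval between successive canvas updates. This design mimics the passage of time during real painting and brings the AI's pace closer to a human's.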
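The two test-time properties, autoregressive updates and a fixed time interval, fit in a short loop. This is a hypothetical sketch with several assumptions:

- The instruction and rendering steps are toy stand-ins: pick the labeled region with the most unfinished pixels, then copy the target into it.
- The canvas is a grayscale NumPy array that starts at zero.
- `labels` is an assumed segmentation map.
- `dt` is an arbitrary placeholder for the fixed interval.

```python
import numpy as np


def paint_autoregressively(target, labels, dt=1.0, max_steps=100):
    """Hypothetical test-time loop: each step conditions on the previous canvas.

    target: float array (H, W), grayscale; labels: int array (H, W).
    Returns the list of canvases and their timestamps.
    """
    canvas = np.zeros_like(target)           # blank canvas (assumed all zeros)
    frames, times = [canvas.copy()], [0.0]
    t = 0.0
    for _ in range(max_steps):
        todo = ~np.isclose(canvas, target)   # pixels that still differ from the target
        if not todo.any():
            break
        # Stand-in instruction: the labeled region with the most unfinished pixels
        region_ids = np.unique(labels)
        counts = [np.count_nonzero(todo & (labels == r)) for r in region_ids]
        region = region_ids[int(np.argmax(counts))]
        mask = todo & (labels == region)
        # Stand-in renderer: copy the target inside the mask
        # (a real system would run its diffusion renderer here)
        canvas = np.where(mask, target, canvas)
        t += dt                              # fixed interval between updates
        frames.append(canvas.copy())
        times.append(t)
    return frames, times
```

Each iteration reads only the canvas left by the previous iteration, which is what makes the loop autoregressive. The timestamps advance by the same `dt` regardless of how much was painted.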

Finally, compared with three baseline methods (Timecraft, Paint Transformer, and Stable Video Diffusion), its results are clearly better.

Community Discussion and Debate

As soon as the technique was posted on Reddit, it sparked wide discussion. The most popular comment voiced concern for artists.

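Many people, however, were positive. They believe the technique could become a powerful tool for learning to paint and a valuable resource for art enthusiasts. Software such as XXAI can already give art enthusiasts good ideas for their paintings.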

Conclusion

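In short, the University of Washington's "Inverse Painting" project vividly shows how technology and art can come together, and it also gives us food for thought. How can we use AI to enhance creativity while preserving the uniquely human creative perspective? How can we strike a balance between technology and art? These are questions we will need to explore in depth as we embrace this exciting technological future.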