Microsoft integrates GPT-4o with LlamaParse to improve the retrieval-augmented generation (RAG) workflow

Microsoft is integrating the advanced GPT-4o models from Azure OpenAI with LlamaParse Premium to build a complete retrieval-augmented generation (RAG) workflow. The goal is to improve unstructured data extraction and the parsing of multimodal documents, with a direct connection to the Azure AI Search vector store.

What is LlamaParse?

LlamaParse, developed by LlamaIndex, is a tool designed specifically for generative AI (GenAI). Its main function is to parse and clean a variety of documents so that the data is of good quality before it is passed to large language models (LLMs).

LlamaParse combines heuristic techniques and machine learning to extract relevant data points, whether they appear in paragraphs of text or are buried in tables.

LlamaParse has the following notable features:

Markdown output: Converts the extracted information into an easy-to-read text format.

LaTeX support: Suited to academic or technical documents that require mathematical notation.

High accuracy: Uses AI to minimize human error in data extraction tasks.
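To make the Markdown output feature concrete, here is a minimal sketch of how tabular data might be rendered as a Markdown table. It is not LlamaParse code: the helper `to_markdown_table` and the sample figures are hypothetical and only illustrate the kind of text format a downstream LLM would receive.

```python
def to_markdown_table(headers, rows):
    """Render a header list and row lists as a Markdown table (illustrative helper)."""
    lines = [
        "| " + " | ".join(headers) + " |",
        "| " + " | ".join("---" for _ in headers) + " |",
    ]
    for row in rows:
        # Convert every cell to text so numbers and strings can be mixed.
        lines.append("| " + " | ".join(str(cell) for cell in row) + " |")
    return "\n".join(lines)


# Made-up sample data, standing in for values extracted from a document.
table_md = to_markdown_table(["Quarter", "Revenue"], [["Q1", 120], ["Q2", 135]])
print(table_md)
```

Plain-text tables like this keep row and column relationships visible to a language model without any binary layout information.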

New Azure OpenAI Endpoint

Azure AI Search serves as the backbone for managing and embedding the processed data.

How it works:

- Parse data: Use LlamaParse to convert unstructured data into structured formats.

- Embed: Send the structured data to the Azure AI Search vector store for efficient querying.

- Retrieve: Apply advanced techniques such as semantic reranking so that users receive the most relevant search results.
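The parse, embed, and retrieve steps above can be sketched in a few lines of plain Python. This is a toy illustration under loud assumptions: `embed` is a bag-of-words stand-in for a real embedding model, `ToyVectorStore` is a hypothetical in-memory substitute for the Azure AI Search vector store, the sample chunks are invented, and semantic reranking is left out entirely.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: lowercase term counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """Hypothetical in-memory store; a real system would call a search service."""

    def __init__(self):
        self._items = []

    def add(self, text):
        self._items.append((text, embed(text)))

    def search(self, query, k=2):
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


# Step 1 (parse): pretend these Markdown chunks came back from a document parser.
parsed_chunks = [
    "## Revenue\nQuarterly revenue grew 12 percent year over year.",
    "## Headcount\nThe company hired 40 engineers in the third quarter.",
    "## Risks\nCurrency fluctuations may reduce revenue next year.",
]

# Step 2 (embed): index every chunk.
store = ToyVectorStore()
for chunk in parsed_chunks:
    store.add(chunk)

# Step 3 (retrieve): fetch the chunks most similar to the question.
top_hits = store.search("How much did revenue grow?", k=2)
print(top_hits[0])
```

In a production pipeline a reranking stage would typically reorder `top_hits` before they reach the language model; the sketch stops at plain similarity search.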

With this integration, users can use the Azure OpenAI GPT-4o model series to extract unstructured data and convert documents. The integration plays to the strengths of both sides: LlamaParse handles efficient parsing, while Azure OpenAI provides powerful language model capabilities, which together yield more accurate and intelligent document processing.

What does the integration of GPT-4o and LlamaParse mean for AI workflows?

This integration combines two powerful tools: LlamaParse Premium and Azure AI Search.

LlamaParse is widely recognized for its strong document parsing capabilities. It can extract and structure data from a range of complex documents, from PDFs to Excel files. It uses advanced multimodal models that handle not only text but also visual content such as charts and diagrams. So whether you are pulling information out of detailed reports or analyzing marketing performance charts, LlamaParse can help.

In a similar spirit, XXAI tools bring 13 popular AI models together on a single platform to give users integrated solutions. Users can switch effortlessly between text processing and image generation, with support along the way.

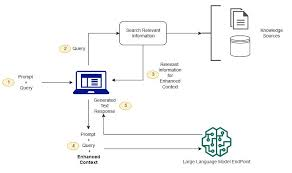

Building a Complete RAG Workflow

LlamaParse connects directly to the GPT-4o and GPT-4o mini models from Azure OpenAI. With Azure OpenAI's multimodal support, users can combine LlamaCloud, Azure AI Search, and Azure OpenAI to create a complete RAG workflow.

Let's look at the specific steps:

Parsing and Enrichment: Use LlamaParse Premium and Azure OpenAI for advanced document extraction, producing LLM-optimized output in several formats, such as Markdown, LaTeX, and Mermaid diagrams.

Chunking and Embedding: Use Azure AI Search as the vector store and draw on the embedding models available in the Azure AI model catalog to chunk, embed, and index the parsed content.

Search and Generation: Use query rewriting and semantic reranking in Azure AI Search to improve search quality. Finally, orchestrate Azure AI Search and Azure OpenAI through LlamaIndex to build generative AI applications.
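As a rough illustration of the chunking and generation steps, the sketch below splits parsed Markdown at headings and then assembles retrieved chunks into a grounded prompt. Both helpers, `chunk_markdown` and `build_prompt`, are hypothetical names invented for this example, as are the sample document and the `max_chars` limit; a real pipeline would rely on the chunking, indexing, and orchestration features of the services named above.

```python
import re
from typing import List


def chunk_markdown(markdown: str, max_chars: int = 200) -> List[str]:
    """Split Markdown at headings, then cap chunks on paragraph boundaries."""
    # Zero-width split before every line that starts with 1-6 '#' and a space.
    sections = re.split(r"(?m)^(?=#{1,6} )", markdown)
    chunks = []
    for section in sections:
        section = section.strip()
        if not section:
            continue
        current = ""
        for paragraph in section.split("\n\n"):
            candidate = f"{current}\n\n{paragraph}" if current else paragraph
            if len(candidate) > max_chars and current:
                # Adding this paragraph would exceed the cap: close the chunk.
                chunks.append(current)
                current = paragraph
            else:
                # Note: a single paragraph longer than max_chars is kept whole.
                current = candidate
        if current:
            chunks.append(current)
    return chunks


def build_prompt(question: str, context_chunks: List[str]) -> str:
    """Assemble a prompt that asks the model to answer from the given context."""
    context = "\n\n---\n\n".join(context_chunks)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


# Invented sample document standing in for parser output.
document = (
    "# Report\n\nIntro paragraph.\n\n"
    "## Sales\n\nSales rose in Q3.\n\nGrowth was driven by new markets.\n\n"
    "## Outlook\n\nNext year looks stable."
)
chunks = chunk_markdown(document, max_chars=40)
prompt = build_prompt("How did sales develop?", chunks[1:3])
print(len(chunks))
print(prompt)
```

Splitting at headings first keeps each chunk tied to one topic, which tends to make both retrieval and the final prompt easier to interpret than fixed-size character windows.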

Enterprise Security and Compliance

For Microsoft, security is of the utmost importance, especially when handling sensitive enterprise data. These tools operate under Azure's high standards for encrypting data in transit and at rest, and they comply with regulations such as GDPR and HIPAA.

In addition, these AI tools offer flexibility, allowing developers to choose custom configurations that meet their organization's needs. Together, these properties make the tooling suitable for sensitive workloads.