What is an Artificial Intelligence Chip?

Artificial intelligence (AI) chips are microchips designed specifically to accelerate AI workloads. Unlike general-purpose chips, they are built to efficiently handle demanding AI tasks such as machine learning (ML), data analysis, and natural language processing (NLP).

The term "AI chip" covers a wide range of chip types designed to meet the computational demands of AI algorithms, including graphics processing units (GPUs), field-programmable gate arrays (FPGAs), and application-specific integrated circuits (ASICs). Central processing units (CPUs) can also handle simple AI tasks, but their role in AI has steadily diminished as specialized hardware has matured.

How Do AI Chips Work?

AI chips are microchips made from semiconductor materials. Like other chips, they contain vast numbers of tiny switches (transistors) that control the flow of electricity to store data and perform logic operations: memory chips manage data storage and retrieval, while logic chips carry out data processing. AI chips are built for dense, data-heavy workloads that exceed the performance limits of traditional CPUs. To handle them, AI chips pack in more, faster, and more efficient transistors, delivering more performance per unit of energy.

AI chips also have features that significantly accelerate the computations AI algorithms require. The most important is parallel processing, the ability to perform many computations simultaneously. Parallel processing is crucial in AI because workloads such as neural networks consist of large numbers of independent calculations, and executing them at the same time makes complex computations much faster and more efficient.
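To make "independent calculations" concrete, the following is a minimal Python sketch (not how any particular chip works) of a matrix-vector product, the core operation of a neural network layer. Each output element is a separate dot product that does not depend on any other, so the rows can be dispatched all at once instead of one after another. The function names and sample values are hypothetical. Because of CPython's global interpreter lock, the thread pool here illustrates the structure of the work rather than a real speedup; an AI chip performs this kind of fan-out in hardware across thousands of units.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, vec):
    """One independent task: the dot product of a single row with the vector."""
    return sum(a * b for a, b in zip(row, vec))


def matvec_sequential(matrix, vec):
    # Sequential style: rows are processed one after another.
    return [dot(row, vec) for row in matrix]


def matvec_parallel(matrix, vec, workers=4):
    # No row depends on another row's result, so every row can be
    # handed to a worker at the same time.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(dot, matrix, [vec] * len(matrix)))


if __name__ == "__main__":
    weights = [[1, 2, 3], [4, 5, 6], [7, 8, 9], [1, 0, -1]]
    inputs = [1, 1, 2]
    print(matvec_sequential(weights, inputs))
    print(matvec_parallel(weights, inputs))
```

Both functions return the same result; the difference is only in whether the independent dot products are computed in order or concurrently.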

Types of Artificial Intelligence Chips

Different types of AI chips vary in hardware and functionality:

- GPU (Graphics Processing Unit): GPUs are the most common choice for training AI models. Originally designed to render graphics quickly, they excel at the massively parallel arithmetic that model training requires. Multiple GPUs are often linked together to train large AI systems in parallel.

- FPGA (Field-Programmable Gate Array): FPGAs are very useful for deploying AI models because they can be reprogrammed after manufacturing, almost "instantaneously", so the same chip can be adapted to different tasks, particularly image and video processing.

- ASIC (Application-Specific Integrated Circuit): ASICs are accelerator chips designed for specific tasks. They cannot be reprogrammed, but on the workloads they target they deliver extremely high performance, usually outperforming general-purpose processors and other AI chips. A typical example is Google's Tensor Processing Unit (TPU), designed specifically to optimize machine learning performance.

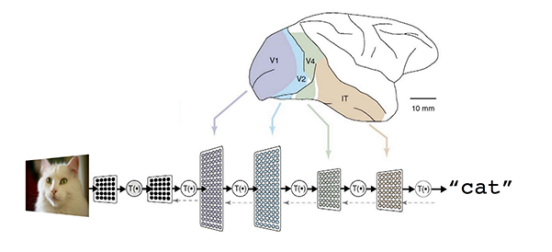

- NPU (Neural Processing Unit): NPUs are dedicated accelerators, often integrated alongside the CPU, that take on AI workloads. Like GPUs, they process large amounts of data in parallel, but their design is focused specifically on deep learning and neural networks. This makes them well suited to advanced AI tasks such as object detection, speech recognition, and video editing. For the neural-network workloads they are designed for, NPUs can outperform GPUs, particularly in energy efficiency.

Applications of AI Chips

Without these specially designed AI chips, many advances in modern artificial intelligence would be impossible. Here are several practical applications:

Large Language Models

AI chips speed up the training and refinement of artificial intelligence, machine learning, and deep learning algorithms, which is particularly valuable for developing large language models (LLMs). By processing data in parallel and accelerating neural network operations, they improve LLM performance, which in turn benefits chatbots, AI assistants, and text generators.

Edge Artificial Intelligence

Many smart devices, such as smartwatches and smart home products, rely on AI chips to process information where the data is generated instead of sending it to the cloud. This makes them faster, more secure, and more energy-efficient.

Autonomous Vehicles

AI chips help autonomous vehicles process the massive amounts of data collected by sensors such as LiDAR and cameras, supporting complex tasks like image recognition. They enable real-time decision-making, greatly enhancing the vehicle's intelligence.

Robotics

AI chips power a range of machine learning and computer vision tasks, allowing robots to perceive and respond to their environment more effectively. This applies across robotics, from collaborative robots that help harvest crops to humanoid robots that provide companionship.

Why Are AI Chips Important?

With the rapid growth of the artificial intelligence industry, specialized AI chips have become key to building AI solutions. Compared with general-purpose CPUs and older chips, modern AI chips offer significant improvements in speed, flexibility, efficiency, and performance.

Faster Processing Speed: Modern AI chips use parallel computing architectures that can execute thousands to millions of calculations simultaneously, greatly increasing processing speed.

Higher Flexibility: AI chips are more customizable, allowing specialized designs tailored to different application areas, which significantly promotes innovation and development in AI.

More Efficient: Modern AI chips use significantly less energy for AI workloads, making them more environmentally friendly in resource-intensive settings such as data centers.

Better Performance: Because AI chips are designed for specific tasks, they often deliver more accurate results on the AI workloads they target.

Why Are AI Chips Better than Ordinary Chips?

For developing and deploying artificial intelligence, AI chips hold a clear advantage over ordinary chips, primarily because of their design. The main difference lies in how they compute: general-purpose chips such as CPUs are optimized to work through instructions largely sequentially, while AI chips are built for massive parallel processing. This lets AI chips solve many small problems simultaneously, leading to faster and more efficient processing.
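A quick back-of-the-envelope calculation shows why parallelism matters so much here. The sketch below counts the work in one matrix multiplication, the dominant operation in neural networks. The 4096 × 4096 size is an illustrative assumption, not a figure from any specific model. Every output element is an independent dot product, so a parallel chip can spread them across many compute units, while a processor working largely sequentially must step through far more of the multiply-accumulate operations in order (real CPUs do have some parallelism through multiple cores and vector instructions, just far less).

```python
def matmul_work(m, k, n):
    """Count the work in multiplying an (m x k) matrix by a (k x n) matrix.

    Each of the m * n output elements is an independent dot product of
    length k, so all of them could in principle be computed at once.
    """
    independent_outputs = m * n
    macs_per_output = k  # multiply-accumulate steps in each dot product
    total_macs = independent_outputs * macs_per_output
    return independent_outputs, macs_per_output, total_macs


if __name__ == "__main__":
    outputs, per_output, total = matmul_work(4096, 4096, 4096)
    print(f"{outputs:,} independent dot products")
    print(f"{per_output:,} multiply-accumulates each")
    print(f"{total:,} multiply-accumulates in total")
```

For the 4096 × 4096 case this gives about 16.8 million independent dot products and roughly 68.7 billion multiply-accumulates, which is the kind of workload where "thousands to millions of calculations simultaneously" pays off.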

AI Chips Are More Energy Efficient

AI chip designs are more energy-efficient because they use low-precision arithmetic, which needs fewer transistors per calculation and therefore less energy. In addition, because they excel at parallel processing, AI chips can distribute workloads more effectively, further lowering energy use. This helps reduce the carbon footprint of AI in data centers. AI chips also make edge AI devices more efficient; mobile phones, for example, rely on optimized AI chips to process personal data without draining the battery.
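The trade-off behind low-precision arithmetic can be illustrated in software. The following is a minimal sketch assuming symmetric per-vector 8-bit quantization, one common scheme among several; the function names and sample numbers are hypothetical. Floating-point values are mapped to small integers, the dot product is computed entirely with integer multiplies and adds (the cheap operation low-precision hardware is built around), and a single rescale at the end recovers an approximate real-valued result.

```python
def quantize_int8(values):
    """Symmetric linear quantization of floats to integers in [-127, 127].

    Returns the integer codes and the scale needed to map them back.
    """
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [round(v / scale) for v in values]
    return codes, scale


def int8_dot(a, b):
    """Approximate a float dot product using 8-bit integer arithmetic."""
    qa, sa = quantize_int8(a)
    qb, sb = quantize_int8(b)
    # The inner loop uses only small integer multiplies and adds.
    acc = sum(x * y for x, y in zip(qa, qb))
    # One floating-point rescale at the end recovers the real-valued result.
    return acc * sa * sb


if __name__ == "__main__":
    weights = [0.12, -0.53, 0.98, 0.07, -0.31]
    activations = [1.5, 0.25, -0.8, 2.0, 0.6]
    exact = sum(w * x for w, x in zip(weights, activations))
    approx = int8_dot(weights, activations)
    print(f"float result: {exact:.4f}")
    print(f"int8 result:  {approx:.4f}")
    print(f"abs error:    {abs(exact - approx):.4f}")
```

On small examples like this the integer result typically lands very close to the floating-point one, which is why many AI workloads tolerate reduced precision in exchange for lower energy per operation.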

AI Chip Results Are More Accurate

Because AI chips are designed specifically for artificial intelligence, they tend to execute AI-related tasks, such as image recognition and natural language processing, more accurately than ordinary chips. They are built to perform the complex calculations in AI algorithms reliably, reducing the likelihood of errors. This makes AI chips the go-to choice for many high-risk AI applications, such as medical imaging and autonomous vehicles, where both speed and precision are critical.

AI Chips Are Customizable

Unlike general-purpose chips, some AI chips (such as FPGAs and ASICs) can be customized for specific AI models or applications. Customization can involve tailoring the hardware to a model's parameters and optimizing the chip architecture, letting developers adapt hardware to variations in algorithms, data types, and computational demands. This flexibility is vital to the development of AI.

The Future of Artificial Intelligence Chips

Although AI chips play a crucial role in advancing intelligent technology, their future faces several challenges, including supply chain bottlenecks, geopolitical instability, and computational limitations. Nvidia currently holds approximately 80% of the global GPU market, making it a major supplier of AI hardware and software, but its dominant position has sparked controversy. Nvidia, Microsoft, and OpenAI have all come under scrutiny for potentially violating U.S. antitrust laws. Recently, the startup Xockets accused Nvidia of patent theft and antitrust violations.

Frequently Asked Questions

What is the difference between a CPU and a GPU?

A CPU (central processing unit) is a general-purpose chip that handles a wide variety of tasks in a computer system, including running the operating system and managing applications. A GPU (graphics processing unit) can also be programmed for general tasks but is specialized for parallel processing, which makes it best suited for rendering images, running video games, and training AI models.

How much does an AI chip cost?

The cost of AI chips varies, depending on factors like performance. For example, AMD's MI300X chip costs between $10,000 and $15,000, while Nvidia's H100 chip ranges from $30,000 to $40,000.

Which companies produce AI chips?

While Nvidia is the market leader, technology giants like Microsoft, Google, Intel, and IBM are also competing in the production of AI chips.

Summary

AI chips not only surpass traditional chips in speed and performance but, thanks to their flexibility, efficiency, and specialization, are also the main driving force behind the rapid progress of modern artificial intelligence. Looking ahead, continued innovation in AI chips will have profound impacts across many industries. XXAI, an application powered by advanced models such as GPT-4, Claude 3, and DALL-E 3, builds on the capabilities of AI chips and integrates seamlessly into various applications and websites to enhance writing, communication, and productivity.