What is an artificial intelligence chip?

Artificial intelligence (AI) chips are computer microchips designed specifically for building and running AI systems. Unlike traditional chips, AI chips are built to handle complex AI workloads such as machine learning (ML), data analysis, and natural language processing (NLP) efficiently.

The term "AI chip" covers a wide range of chip types that can quickly meet the unique computational demands of AI algorithms, including graphics processing units (GPUs), field-programmable gate arrays (FPGAs), and application-specific integrated circuits (ASICs). Central processing units (CPUs) can also handle simple AI tasks, but their role in modern AI development has gradually diminished.

How do AI chips work?

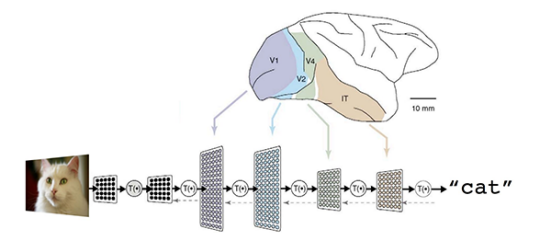

At their core, AI chips are microchips made of semiconductor material that contain a huge number of tiny switches (transistors), which control the flow of electricity to perform memory and logic operations. Memory chips handle storing and retrieving data, while logic chips act as the core for operating on that data. AI chips focus on processing dense data workloads that exceed the performance limits of traditional CPUs. To do this, they integrate more numerous, faster, and more efficient transistors, which improves their performance per unit of energy consumed.

AI chips also have distinctive features that can significantly speed up the calculations AI algorithms require. Chief among them is parallel processing: the ability to perform many calculations simultaneously. Parallel processing is crucial in AI because it lets many tasks run at the same time, so complex calculations are handled faster and more effectively.
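
To make the idea concrete, here is a minimal Python sketch, assuming a simple dot product as the workload: the vectors are split into slices that do not depend on each other, each slice is computed as a separate task, and the partial results are combined at the end. The function names (`chunk_bounds`, `partial_dot`, `parallel_dot`, `sequential_dot`) are hypothetical and the chunk count is arbitrary. Note that CPython's global interpreter lock keeps these threads from running pure-Python arithmetic truly simultaneously, so the sketch illustrates how the work decomposes, not the speedup an AI chip would deliver.

```python
from concurrent.futures import ThreadPoolExecutor


def chunk_bounds(n: int, num_chunks: int) -> list[tuple[int, int]]:
    """Split range(n) into num_chunks contiguous, non-overlapping slices."""
    size, extra = divmod(n, num_chunks)
    bounds = []
    start = 0
    for i in range(num_chunks):
        # The first `extra` slices get one additional element each.
        end = start + size + (1 if i < extra else 0)
        bounds.append((start, end))
        start = end
    return bounds


def partial_dot(a: list[float], b: list[float], start: int, end: int) -> float:
    """Dot product of one slice; it does not depend on any other slice."""
    return sum(a[i] * b[i] for i in range(start, end))


def parallel_dot(a: list[float], b: list[float], num_chunks: int = 4) -> float:
    """Submit each slice as an independent task, then combine the partial sums."""
    with ThreadPoolExecutor(max_workers=num_chunks) as pool:
        futures = [pool.submit(partial_dot, a, b, s, e)
                   for s, e in chunk_bounds(len(a), num_chunks)]
        return sum(f.result() for f in futures)


def sequential_dot(a: list[float], b: list[float]) -> float:
    """Reference version: one multiply-add after another."""
    total = 0.0
    for x, y in zip(a, b):
        total += x * y
    return total


if __name__ == "__main__":
    a = [float(i) for i in range(1000)]
    b = [2.0] * 1000
    print(sequential_dot(a, b), parallel_dot(a, b))
```

Both functions return the same value because the slices share no intermediate state; that independence is what parallel hardware exploits.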

Types of artificial intelligence chips

The different types of AI chips vary in hardware and functionality:

- GPU (Graphics Processing Unit): GPUs are the chips most commonly used to train AI models. They were originally designed to render graphics quickly, and the same massively parallel architecture that serves graphics also makes them well suited to AI training. Multiple GPUs are often linked together to train a single AI system.

- FPGA (Field-Programmable Gate Array): FPGAs are very useful for running AI models because they can be reprogrammed after manufacture, which makes them adaptable to different tasks, especially image and video processing.

- ASIC (Application-Specific Integrated Circuit): ASICs are accelerator chips designed for specific tasks. They cannot be reprogrammed, but that specialization lets them deliver extremely high performance and generally gives them an advantage over general-purpose processors and other AI chips on the tasks they target. A typical example is Google's Tensor Processing Unit (TPU), designed specifically to optimize machine learning performance.

- NPU (Neural Processing Unit): NPUs are modern components that work alongside CPUs to handle AI workloads. Like GPUs, they are designed around the needs of deep learning models and neural networks. As a result, NPUs excel at processing large amounts of data for a variety of advanced AI tasks such as object detection, speech recognition, and video editing. On the AI workloads they are built for, NPUs can outperform GPUs, particularly in energy efficiency.

Applications of AI chips

Without these purpose-built AI chips, many advances in modern artificial intelligence would not be possible. Here are several practical applications:

Large language models

AI chips speed up the training and refinement of artificial intelligence, machine learning, and deep learning algorithms, which is particularly useful when developing large language models (LLMs). They use parallel processing to work through large volumes of data and accelerate neural network operations, improving LLM performance and, with it, chatbots, AI assistants, and text generators.
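
Much of the work inside a neural network layer has exactly this parallel structure. The following sketch, assuming a single fully connected layer y = Wx + b with made-up weights and no activation function, shows that each output neuron is an independent dot product, so the outputs can be computed in any order (or all at once) with the same result. The function names are hypothetical, and real LLMs run these operations on large tensors through optimized libraries rather than Python loops.

```python
def dense_layer(weights: list[list[float]], x: list[float],
                bias: list[float]) -> list[float]:
    """Fully connected layer y = W x + b (no activation function).

    Output i depends only on row i of W, the shared input x and bias[i],
    so every output neuron is an independent dot product.
    """
    if len(weights) != len(bias) or any(len(row) != len(x) for row in weights):
        raise ValueError("shape mismatch between weights, input and bias")
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def dense_layer_in_order(weights: list[list[float]], x: list[float],
                         bias: list[float], order: list[int]) -> list[float]:
    """Same layer, with the outputs computed in a caller-chosen order."""
    y = [0.0] * len(bias)
    for i in order:
        y[i] = sum(w * xi for w, xi in zip(weights[i], x)) + bias[i]
    return y


if __name__ == "__main__":
    W = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]   # made-up 3x2 weight matrix
    x = [1.0, 1.0]
    b = [0.0, 0.0, 1.0]
    print(dense_layer(W, x, b))                       # [3.0, 7.0, 12.0]
    print(dense_layer_in_order(W, x, b, [2, 0, 1]))   # [3.0, 7.0, 12.0]
```

Because the order of the outputs does not affect the result, a chip with many processing units can assign each row to a different unit.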

Edge AI

Many smart devices (such as smartwatches and smart home products) rely on AI chips, which let them process information where the data is generated instead of sending it to the cloud. This makes them faster, more secure, and more energy-efficient to use.

Autonomous vehicles

AI chips help autonomous vehicles process the massive amounts of data collected from sensors such as LiDAR and cameras, enabling complex tasks like image recognition. They make real-time decision-making possible, greatly improving the vehicle's intelligence.

Robotics

AI chips can be used for a range of machine learning and computer vision tasks, allowing robots to perceive and respond to their environment more effectively. This applies across the field of robotics, from collaborative robots used to harvest crops to humanoid robots that provide companionship.

Why are AI chips important?

With the rapid growth of the AI industry, specialized AI chips have become key to building AI solutions of all kinds. Compared with CPUs or older chips, modern AI chips offer significant improvements in speed, flexibility, efficiency, and performance.

- Faster processing: Modern AI chips use parallel computing techniques that can execute thousands to millions of calculations simultaneously, greatly increasing processing speed.

- Greater flexibility: AI chips are more customizable, allowing specialized designs tailored to the needs of different application areas, which significantly drives innovation and development in AI.

- Higher efficiency: Modern AI chips also use significantly less energy for the same work, which makes them more environmentally friendly in resource-intensive settings such as data centers.

- Better performance: Because AI chips are designed for specific tasks, they often deliver more accurate results when running AI-related workloads.

Why are AI chips better than ordinary chips?

In developing and deploying artificial intelligence, AI chips far outperform ordinary chips, mainly because of their distinctive design. The key difference between general-purpose chips (such as CPUs) and AI chips lies in how they compute: general-purpose chips rely mainly on sequential processing, while AI chips use parallel processing. This lets AI chips solve many small problems at the same time, resulting in faster and more efficient processing.
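
A rough way to see why this matters is to count steps under idealized assumptions: N fully independent, equally sized tasks, no overhead for distributing work, and a chip that can run p tasks per step. A sequential unit needs N steps, while the parallel chip needs ⌈N/p⌉. The sketch below encodes that simplified model; the task and unit counts are hypothetical, and real hardware never reaches this ideal because of memory bandwidth, synchronization, and parts of a workload that cannot be parallelized.

```python
def sequential_steps(num_tasks: int) -> int:
    """One processing unit completes one task per step."""
    return num_tasks


def parallel_steps(num_tasks: int, num_units: int) -> int:
    """Idealized parallel chip: num_units independent tasks per step.

    Assumes every task is independent and equally sized, with no cost for
    distributing work or collecting results. Uses integer ceiling division.
    """
    if num_units < 1:
        raise ValueError("num_units must be at least 1")
    return (num_tasks + num_units - 1) // num_units


if __name__ == "__main__":
    tasks = 1_000_000   # hypothetical number of small, independent calculations
    units = 1_024       # hypothetical number of parallel processing units
    print(sequential_steps(tasks), parallel_steps(tasks, units))
```

Under these assumptions, one million small tasks take 977 steps on 1,024 parallel units instead of one million steps on a single sequential unit.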

AI chips are more energy-efficient

AI chip designs are more energy-efficient because they use low-precision arithmetic, which requires fewer transistors per calculation and therefore less energy. In addition, because they excel at parallel processing, AI chips can distribute workloads more effectively, reducing energy use further. This helps shrink the carbon footprint of the AI industry's data centers. AI chips also make edge AI devices more efficient: mobile phones, for example, need optimized AI chips to process personal data without draining the battery.
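
Low-precision arithmetic often means storing and computing with 8-bit integers instead of 32-bit floating-point numbers. The sketch below shows one common scheme, assuming symmetric per-tensor quantization: values are scaled so the largest magnitude maps to 127, rounded to integers, and scaled back when needed. The function names and sample weights are hypothetical, and production quantization toolchains use more elaborate schemes; the point is that each value shrinks from 4 bytes to 1 at the cost of a small, bounded rounding error.

```python
from array import array


def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization of floats to the int8 range [-127, 127].

    Returns the integer codes and the scale needed to map them back.
    """
    max_abs = max((abs(v) for v in values), default=0.0)
    # One scale for the whole tensor: the largest magnitude maps to 127.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer and clamp in case of floating-point overshoot.
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize_int8(codes: list[int], scale: float) -> list[float]:
    """Approximate reconstruction of the original floats."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    weights = [0.82, -1.5, 0.031, 0.0, 1.2]   # made-up example values
    codes, scale = quantize_int8(weights)
    restored = dequantize_int8(codes, scale)

    # Storage per value: 4 bytes as float32, 1 byte as int8.
    print(array("f", weights).itemsize, array("b", codes).itemsize)
    # Each restored value is within scale / 2 of the original (plus float noise).
    print(max(abs(a - b) for a, b in zip(weights, restored)) <= scale / 2 + 1e-9)
```

Smaller values mean less memory traffic, and 8-bit integer multiply-adds need simpler circuits than 32-bit floating-point ones, which is where much of the energy saving comes from.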

AI chips produce more accurate results

Because AI chips are designed specifically for artificial intelligence, they tend to perform AI tasks such as image recognition and natural language processing more accurately than ordinary chips. They are built to carry out the complex calculations in AI algorithms precisely, which reduces the likelihood of errors. This makes AI chips the preferred choice for many high-stakes AI applications, such as medical imaging and autonomous vehicles, where both speed and accuracy are crucial.

AI chips are customizable

Unlike general-purpose chips, AI chips (such as FPGAs and ASICs) can be customized for specific AI models or applications. This customization, which ranges from tuning for particular model parameters to optimizing the chip's architecture, is vital for AI development: it lets developers tailor the hardware to their needs and accommodate differences in algorithms, data types, and computational demands.

The future of artificial intelligence chips

Although AI chips play a crucial role in advancing intelligent technology, their future faces several challenges, including supply chain bottlenecks, geopolitical instability, and computational limitations. Nvidia currently holds about 80% of the global GPU market, making it a major supplier of AI hardware and software, but its near-monopoly position has sparked controversy. Nvidia, Microsoft, and OpenAI have come under scrutiny over possible violations of U.S. antitrust law. More recently, the startup Xockets accused Nvidia of patent theft and antitrust violations.

Frequently Asked Questions

What is the difference between a CPU and a GPU? A CPU (central processing unit) is a general-purpose chip that can handle a wide variety of tasks within a computer system, including running the operating system and managing applications. A GPU (graphics processing unit) can also run a range of workloads, but it is built for parallel processing, which makes it better suited to rendering images, running video games, and training AI models.

How much does an AI chip cost? The cost of AI chips varies with factors such as performance. For example, AMD's MI300X chip costs between $10,000 and $15,000, while Nvidia's H100 ranges from $30,000 to $40,000.

Which companies make AI chips? Nvidia is the market leader, but tech giants such as Microsoft, Google, Intel, and IBM also compete in AI chip production.

Summary

AI chips not only outperform traditional chips in speed and performance; their flexibility, efficiency, and specialization also make them the main driving force behind the rapid development of modern artificial intelligence. Looking ahead, continued innovation in AI chips will have a profound impact across many industries. XXAI, an application powered by advanced models such as GPT-4, Claude 3, and DALL-E 3, takes full advantage of the power of AI chips and can be integrated seamlessly into a variety of applications and websites to improve writing, communication, and productivity.