Difference Between Gemini 1.5 Flash and Gemini 1.5 Pro

Google's Gemini series of large language models (LLMs) has taken the AI world by storm. Gemini 1.5 has further advanced artificial intelligence with its new features and capabilities. The two main versions of the current release (Pro and Flash) have garnered significant attention. This article will delve into the differences between Gemini 1.5 Pro and Flash to help you choose the version that best suits your needs.

Evolution of Google Gemini Models

Since its launch, Google's Gemini models have undergone numerous updates and enhancements. With each update, Google strives to enhance the performance and capabilities of the Gemini models, making them more powerful and versatile.

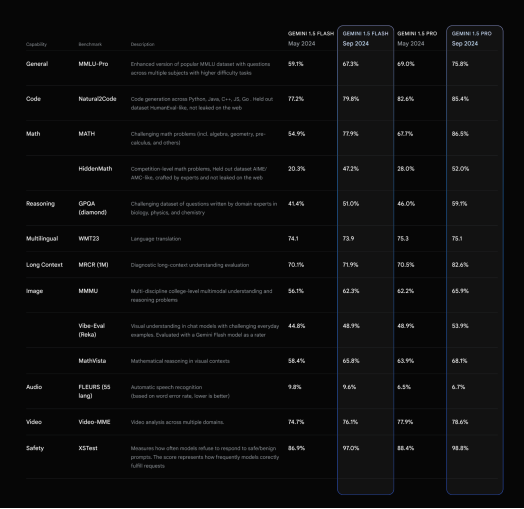

On September 24, 2024, Google released two updated production-ready Gemini models: Gemini-1.5-Pro-002 and Gemini-1.5-Flash-002. Google describes them as significant improvements over their predecessors, with stronger capabilities, faster output, and lower costs. In a series of benchmarks, the models show gains on mathematical, long-context, and visual tasks. They build on Google's most recent experimental model versions and improve substantially on the Gemini 1.5 models released in May at Google I/O.

The continuous development and improvement of Gemini models reflect Google's commitment to advancing the AI field. By incorporating user feedback and leveraging the latest advances in AI research, Google offers a series of powerful and innovative models under the Gemini framework.

Exploring Gemini 1.5 Flash

Gemini 1.5 Flash is a lightweight model optimized for speed and efficiency. It is built for high-volume, high-frequency tasks, which makes it well suited to applications that need fast responses and high scalability. Despite its smaller size, it can perform multi-modal reasoning over large amounts of information and still deliver high-quality results. Use cases where Gemini 1.5 Flash excels include summarization, chat applications, image and video captioning, extracting data from lengthy documents and tables, and processing hours of audio content.

Gemini 1.5 Flash is produced through a process called “distillation,” in which the most essential knowledge and skills of a larger model, here Gemini 1.5 Pro, are transferred to a smaller, more efficient one. This keeps Gemini 1.5 Flash lightweight while preserving much of the larger model's performance.
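To make the idea of distillation more concrete, here is a minimal, generic sketch of the classic soft-target distillation loss, in which a student model is penalized for diverging from a teacher's softened output distribution. It only illustrates the general technique and is not Google's actual training recipe, which this article does not describe. The logits, the temperature value, and the function names are all made up for the example.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence KL(teacher || student) over temperature-softened outputs."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical scores over three candidate tokens (illustrative values only).
teacher = [4.0, 1.0, 0.5]
close_student = [3.8, 1.1, 0.6]  # nearly agrees with the teacher
far_student = [0.5, 1.0, 4.0]    # prefers a different token

print("close student loss:", distillation_loss(teacher, close_student))
print("far student loss:  ", distillation_loss(teacher, far_student))
```

The student that ranks tokens the way the teacher does gets a loss near zero, while the one that disagrees gets a much larger loss. Minimizing this quantity during training is what pushes a smaller model toward the behavior of a larger one.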

Exploring Gemini 1.5 Pro

Gemini 1.5 Pro is designed to handle complex tasks that require advanced reasoning and analysis, which makes it a strong choice for developers whose AI projects have demanding requirements. Its longer context window lets it take in more information at once and reason over it more comprehensively and in more detail. It is also available in AI Studio and is accompanied by a broad range of ethical guidelines, giving developers tools and resources for building responsible AI applications. Additionally, 1.5 Pro is now integrated into a variety of Google products, including Gemini Advanced and Workspace apps, making this generative AI model easier to access and use.

For developers and enterprise customers looking to push the boundaries of artificial intelligence and solve complex problems through advanced reasoning and analysis, Gemini 1.5 Pro is the top choice.

Comparing Gemini 1.5 Flash and Pro

The Gemini 1.5 series models are designed to deliver strong general-purpose performance across text, code, and multi-modal tasks. Gemini 1.5 comes in two versions: Gemini 1.5 Flash and Gemini 1.5 Pro. Although both models offer advanced features and enhancements, there are notable differences between the two.

Gemini 1.5 Flash

- Key Features: Optimized for speed and efficiency

- Use Cases: Summarization, chat applications, image and video captioning, extracting data from lengthy documents and tables

Gemini 1.5 Pro

- Key Features: Enhanced capability to execute complex tasks

- Use Cases: Long-context reasoning, development in AI Studio, applications that follow ethical guidelines, audio and image understanding

With the latest updates, 1.5 Pro and Flash now perform better, faster, and more cost-efficiently in production environments. Google reports an approximate 7% improvement on MMLU-Pro, a more challenging version of the popular MMLU benchmark. On the MATH and HiddenMath benchmarks (the latter an internal held-out set of competition math problems), both models improved significantly, by around 20%. For visual and code use cases, evaluations measuring visual comprehension and Python code generation showed better performance for both models, with gains ranging from about 2% to 7%.

Google claims that these models now provide more helpful responses while maintaining content safety standards. Based on developer feedback, the company also made the default output style more concise, aiming to make the models easier and cheaper to use. For tasks like summarization, Q&A, and extraction, the output of the updated models is approximately 5-20% shorter than that of the previous models.

Users can access the new Gemini models via Google AI Studio, the Gemini API, and Vertex AI (for Google Cloud customers). The chat-optimized version of Gemini 1.5 Pro-002 will soon be available to Gemini Advanced users. New pricing for prompts below 128,000 tokens takes effect on October 1, 2024. Combined with context caching, Google expects the cost of building with Gemini to fall further.

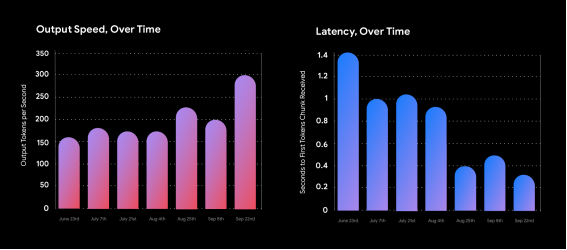

To make it easier for developers to build with Gemini, the paid tier rate limit for 1.5 Flash has increased to 2,000 requests per minute (RPM) and the paid tier rate limit for 1.5 Pro has increased to 1,000 RPM, up from 1,000 and 360, respectively. In addition to the core improvements to the latest models, Google has reduced latency with 1.5 Flash and significantly increased the number of output tokens per second, enabling new use cases.
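As a rough illustration of what these RPM figures mean in practice, the sketch below converts a limit into a minimum gap between requests and spaces calls accordingly. It is a simple client-side throttle written for this article, not part of any Google SDK; the `Throttle` class and its method names are hypothetical, and real limits also depend on your account tier and on token-based quotas.

```python
import time

# Paid-tier limits quoted in this article, in requests per minute (RPM).
PAID_TIER_RPM = {"gemini-1.5-flash": 2000, "gemini-1.5-pro": 1000}

def min_interval_seconds(rpm):
    """Smallest gap between requests that stays within an RPM limit."""
    return 60.0 / rpm

class Throttle:
    """Hypothetical helper that spaces calls evenly to respect an RPM limit."""

    def __init__(self, rpm, clock=time.monotonic, sleep=time.sleep):
        self.interval = min_interval_seconds(rpm)
        self.clock = clock
        self.sleep = sleep
        self.next_allowed = None  # no request has been sent yet

    def wait(self):
        """Block until the next request may be sent; return the seconds slept."""
        now = self.clock()
        delay = 0.0
        if self.next_allowed is not None and now < self.next_allowed:
            delay = self.next_allowed - now
            self.sleep(delay)
            now = self.next_allowed
        self.next_allowed = now + self.interval
        return delay

throttle = Throttle(PAID_TIER_RPM["gemini-1.5-pro"])
for i in range(3):
    throttle.wait()  # replace the print below with a real API call
    print("request", i, "sent")
```

At 2,000 RPM the gap works out to about 0.03 seconds per request, and at 1,000 RPM to about 0.06 seconds. The injectable `clock` and `sleep` arguments exist only so the helper can be tested without actually waiting.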

Key Features of Gemini 1.5 Flash and Pro

Key Features of Gemini 1.5 Flash

- Speed Optimization: Provides rapid performance for high-volume, high-frequency tasks.

- Multi-Modal Reasoning: Capable of processing and analyzing various types of data (such as text, images, and videos).

- Integration with Google Cloud Console: Offers a seamless and efficient environment for application deployment and management.

Key Features of Gemini 1.5 Pro

1. Enhanced Functionality:

- Provides a longer context window.

2. Reasoning Capabilities:

- Processes and analyzes more information.

- Performs more comprehensive and detailed reasoning.

3. Integration with AI Studio:

- Enhances the capabilities of Gemini 1.5 Pro.

- Allows developers to build and deploy AI applications using this platform.

- Supports the creation of complex AI models.

4. Ethical Standards:

- Emphasizes ethics and morality.

- Offers extensive ethical features to ensure responsible AI development and application.

Gemini 1.5 Pro demonstrates its powerful capabilities and immense potential in handling complex tasks and developing advanced AI applications.

How to Choose Between Gemini 1.5 Flash and Gemini 1.5 Pro

The best choice between Gemini 1.5 Flash and Gemini 1.5 Pro depends on your specific requirements:

- For Complex Tasks Requiring High Attention to Detail: If you are interested in solving difficult problems and obtaining excellent outputs, it is best to use Gemini 1.5 Pro.

- For Faster, More Cost-Effective, Less Complex Tasks: When quick answers and value for money are the top priorities, Gemini 1.5 Flash may be more suitable.
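The two rules of thumb above can be written as a small routing helper. This is only a sketch of the decision described in this article; the function name and its two boolean flags are hypothetical, and letting complex reasoning win when both flags are set is an assumption rather than official guidance.

```python
FLASH = "gemini-1.5-flash"
PRO = "gemini-1.5-pro"

def choose_model(needs_complex_reasoning, speed_or_cost_is_priority):
    """Pick a model name from two coarse requirements (hypothetical helper)."""
    if needs_complex_reasoning:
        # Assumption: output quality on hard tasks outweighs speed and cost.
        return PRO
    if speed_or_cost_is_priority:
        return FLASH
    # Assumption: default to the cheaper, faster model when nothing is specified.
    return FLASH

print(choose_model(needs_complex_reasoning=True, speed_or_cost_is_priority=False))
print(choose_model(needs_complex_reasoning=False, speed_or_cost_is_priority=True))
```

In a real application the flags would likely be replaced by measurable signals such as prompt length, latency budget, or cost per request.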

Gemini 1.5 continuously integrates the latest innovations in machine learning and artificial intelligence, ensuring it remains at the forefront of AI technology and delivers cutting-edge performance and features.

Frequently Asked Questions

How to Start Using Gemini 1.5?

To start using Gemini 1.5, you can access it through the Gemini API, Google AI Studio, or Vertex AI. Gemini Live offers real-time conversational experiences, while the Google Cloud Console lets you manage and deploy models. You can request structured output with JSON mode and build the model's features into your own applications. Gemini 1.5 can also be used alongside open models and in various chat applications.
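As a starting point, the sketch below builds a `generateContent` request for the Gemini API's REST interface using only the Python standard library. It constructs the request without sending it, so it runs without an API key. The `build_request` helper is hypothetical and `YOUR_API_KEY` is a placeholder; model availability changes over time, so check the current model list before relying on a specific model name.

```python
import json
import urllib.request

API_ROOT = "https://generativelanguage.googleapis.com/v1beta"

def build_request(prompt, api_key, model="gemini-1.5-flash", json_mode=False):
    """Build (but do not send) a generateContent request (hypothetical helper)."""
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    if json_mode:
        # JSON mode: ask the model to reply with JSON instead of free text.
        body["generationConfig"] = {"responseMimeType": "application/json"}
    return urllib.request.Request(
        url=f"{API_ROOT}/models/{model}:generateContent",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )

request = build_request("Summarize this article in one sentence.", "YOUR_API_KEY")
print(request.full_url)

# To actually call the API, supply a real key and uncomment:
# with urllib.request.urlopen(request) as response:
#     reply = json.load(response)
#     print(reply["candidates"][0]["content"]["parts"][0]["text"])
```

Switching to the Pro model only requires passing `model="gemini-1.5-pro"`. For anything beyond a quick test, Google's official SDKs are likely a better fit than hand-built HTTP requests.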

Is Gemini 1.5 Compatible with Older Operating Systems?

In practice, yes. Gemini 1.5 runs on Google's servers rather than on your device, so what matters is whether your web browser or API client is supported, not the age of the operating system itself. Users on older systems can generally reach the models through a supported, up-to-date browser or through the API.

Conclusion

In conclusion, Gemini 1.5 combines cutting-edge technology with user-centric design. Flash and Pro are complementary variants rather than successive versions: Flash prioritizes speed and cost, while Pro prioritizes depth of reasoning, so together they cover diverse user needs. Looking forward, Gemini 1.5 paves the way for a more innovative and user-friendly AI experience, with improved access to next-generation technology.
