AI Model Selection 101: A 2024 Guide for Beginners and Experts Alike

Introduction

In the fast-moving field of artificial intelligence (AI), choosing the right model for your application is a decision that can significantly affect the success of your project. This guide walks you through selecting among the leading large language models (LLMs) available in 2024, such as GPT-4 and Claude, by comparing them on quality, speed, and price.


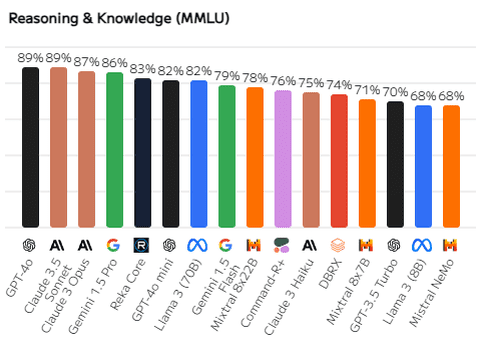

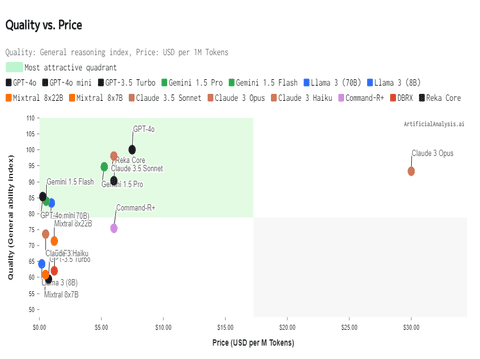

Quality vs. Price

Most teams look for a balance between quality and price. The following chart plots a general reasoning index against price; the most attractive quadrant is the one that combines high quality with low price.
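One simple way to locate that quadrant programmatically is to split each axis at its median and keep the models that fall above the quality median and below the price median. The sketch below assumes that median split as the quadrant boundary; the model names and numbers are hypothetical placeholders, not values taken from the chart.

```python
from statistics import median

# Hypothetical placeholder data: (model name, quality index, USD per 1M tokens).
# Illustrative only; these are not real benchmark results or prices.
MODELS = [
    ("model-a", 80, 15.0),
    ("model-b", 76, 0.6),
    ("model-c", 65, 0.5),
    ("model-d", 85, 30.0),
    ("model-e", 58, 0.2),
    ("model-f", 70, 2.0),
]


def attractive_quadrant(models):
    """Return models with above-median quality and below-median price.

    The median split is an assumed definition of the quadrant boundary.
    """
    q_mid = median(q for _, q, _ in models)
    p_mid = median(p for _, _, p in models)
    return [name for name, q, p in models if q > q_mid and p < p_mid]


print(attractive_quadrant(MODELS))  # ['model-b']
```

With this placeholder data, only `model-b` is both better than the median on quality and cheaper than the median on price. Fixed thresholds, such as a hard budget ceiling, could replace the medians if your constraints are known in advance.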

Quality vs. Output Speed

Understanding the trade-off between quality and output speed is essential for selecting the right AI model. The chart below shows this relationship.

For a more detailed analysis of this trade-off, see the resource Quality vs. Output Speed.

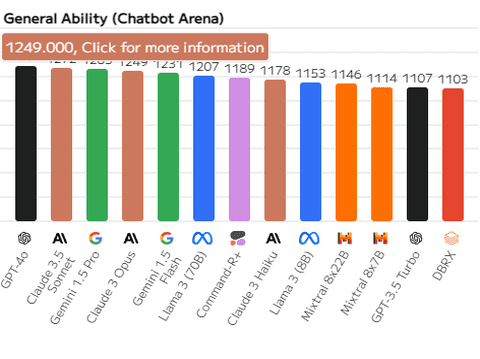
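A quality-versus-speed chart can also be read as a Pareto frontier: the set of models for which no alternative is both at least as good and at least as fast. A minimal sketch, assuming higher is better on both axes and using hypothetical placeholder data rather than the chart's actual values:

```python
# Hypothetical placeholder data: (model name, quality index, output tokens/second).
MODELS = [
    ("model-a", 80, 40.0),
    ("model-b", 76, 90.0),
    ("model-c", 65, 150.0),
    ("model-d", 85, 25.0),
    ("model-e", 60, 100.0),
]


def dominates(x, y):
    """True if x is at least as good as y on both axes and strictly better on one."""
    _, qx, sx = x
    _, qy, sy = y
    return qx >= qy and sx >= sy and (qx > qy or sx > sy)


def pareto_frontier(models):
    """Return names of models that no other model dominates."""
    # A model never dominates itself, so no self-exclusion check is needed.
    return [m[0] for m in models if not any(dominates(o, m) for o in models)]


print(pareto_frontier(MODELS))  # ['model-a', 'model-b', 'model-c', 'model-d']
```

Here `model-e` drops out because `model-c` is both higher quality and faster; every remaining model represents a different point on the trade-off, and the choice among them depends on how much speed you are willing to give up for quality.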

General Ability

Chatbot Arena, which ranks models using crowdsourced pairwise human preference votes, offers a broad view of their general ability:

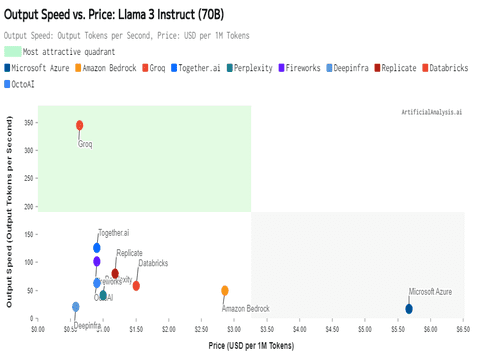
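Chatbot Arena turns head-to-head votes into a ranking. Its leaderboard was originally reported as Elo ratings and later switched to a Bradley-Terry model fit, so the online Elo update below only illustrates the underlying idea; it is not the leaderboard's exact procedure. The K-factor of 32 and the starting ratings are assumptions, and ties are not handled.

```python
def expected_score(r_a, r_b):
    """Probability that A beats B under the Elo model (400-point logistic scale)."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))


def elo_update(r_a, r_b, a_won, k=32.0):
    """Return updated (r_a, r_b) after one pairwise vote.

    k=32 is an illustrative choice, not Chatbot Arena's setting.
    """
    e_a = expected_score(r_a, r_b)
    s_a = 1.0 if a_won else 0.0
    delta = k * (s_a - e_a)
    # Zero-sum update: whatever A gains, B loses.
    return r_a + delta, r_b - delta


print(elo_update(1000, 1000, a_won=True))  # (1016.0, 984.0)
```

Because two equally rated models each have an expected score of 0.5, a single win moves the winner up by half the K-factor. An upset against a much higher-rated model moves the ratings further.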

Output Speed vs. Price

For applications requiring high-speed processing, the relationship between output speed and price is critical. The following chart illustrates this for the Llama 3 Instruct (70B) model.

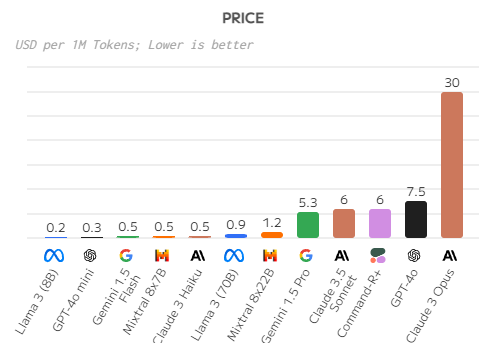
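If your application needs a minimum output speed, one way to use a chart like this is to discard options below that speed and then pick the cheapest remaining one. A sketch under that assumption; the provider names, speeds, and prices are hypothetical placeholders, not the values plotted for Llama 3 Instruct (70B).

```python
# Hypothetical placeholder quotes for one model served by several API providers:
# (provider, output tokens/second, blended USD per 1M tokens). Not real data.
QUOTES = [
    ("provider-x", 300.0, 0.90),
    ("provider-y", 120.0, 0.60),
    ("provider-z", 45.0, 0.55),
]


def cheapest_meeting_speed(quotes, min_tokens_per_s):
    """Return the cheapest provider whose output speed meets the requirement, or None."""
    eligible = [q for q in quotes if q[1] >= min_tokens_per_s]
    if not eligible:
        return None
    # Among providers fast enough, choose the one with the lowest price.
    return min(eligible, key=lambda q: q[2])[0]


print(cheapest_meeting_speed(QUOTES, 100))  # provider-y
```

Raising the requirement to 200 tokens/second would leave only `provider-x`, which shows how a speed constraint can force a higher price.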

Pricing Analysis

Because providers usually price input and output tokens separately, a detailed pricing analysis for the Llama 3 Instruct (70B) model should account for both to give a clear picture of cost-effectiveness.
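Per-request cost follows directly from the two per-token prices, and a single "blended" price can be computed as a weighted average of them. The sketch below assumes prices quoted in USD per 1 million tokens and a 3:1 input-to-output token ratio for blending; both the ratio and the example prices are assumptions, so substitute your own traffic mix and your provider's actual rates.

```python
def request_cost(input_tokens, output_tokens, input_price_per_m, output_price_per_m):
    """USD cost of one request, with both prices quoted per 1M tokens."""
    return (input_tokens * input_price_per_m + output_tokens * output_price_per_m) / 1_000_000


def blended_price(input_price_per_m, output_price_per_m, input_ratio=3, output_ratio=1):
    """Weighted average price per 1M tokens.

    The default 3:1 input:output ratio is an assumed traffic mix.
    """
    total = input_ratio + output_ratio
    return (input_ratio * input_price_per_m + output_ratio * output_price_per_m) / total


# Hypothetical prices: $0.50 per 1M input tokens, $1.50 per 1M output tokens.
print(request_cost(2_000, 500, 0.50, 1.50))
print(blended_price(0.50, 1.50))  # 0.75
```

A workload dominated by long outputs (for example, content generation) would warrant a lower input ratio, which pushes the blended price toward the output rate.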

Conclusion

While XXAI offers a comprehensive solution integrating multiple top-tier models, the optimal choice depends on your specific use case, technical requirements, and organizational constraints. As AI continues to evolve, we anticipate:

- Increased focus on efficient, smaller models

- Advanced multi-modal capabilities

- Greater emphasis on ethical AI and interpretability

For a deeper understanding of AI models and their capabilities, consider watching the following YouTube video:

- "Exploring AI Models: Innovations and Applications" - This video delves into the latest innovations in AI models and their applications across various fields.

As a growing product, XXAI serves customers across a range of industries, including:

- Content creation agencies

- Educational institutions

- Research organizations

- Tech companies

- Marketing firms

Users choose XXAI for its flexibility and range of features. It integrates with a variety of platforms and applications, providing a unified workspace for writing-related tasks. Its dual AI model approach lets it adapt to different tasks, and cross-platform synchronization keeps the experience consistent across devices.